Virtual Kubernetes Clusters on AWS EKS

Cheaper than real clusters and better isolated than namespaces, virtual clusters on Kubernetes are a promising middle ground between the multi-cluster and the multi-namespace approach.

Hey folks, it’s been quiet on infrastructure as posts for a while now, but we are so back!

This year I’ll focus more on providing practical examples for everything I talk about, in the form of Terraform repos that let you reproduce each setup.

The code for this and all future setups can be found here:

https://github.com/infrastructure-as-posts

Today I want to showcase virtual Kubernetes clusters. More specifically, we will explore the tool vcluster and how to use it to create virtual clusters inside an EKS cluster on AWS.

Requirements to follow along with this article:

Terraform → https://developer.hashicorp.com/terraform/install

Helm → https://helm.sh/docs/intro/install/

vcluster → https://www.vcluster.com/docs/getting-started/setup

AWS Account

Note that this setup exceeds the AWS free tier and can incur charges on your AWS account, depending on how long you keep it up and running. Remember to always clean up (terraform destroy) after you are done with your experiments.

Find the necessary code for this exact setup here:

https://github.com/infrastructure-as-posts/vcluster-on-eks

For readability’s sake, the code snippets in this article have been kept short and may be missing some parts, which can be found in the repository linked above.

Why virtual clusters?

Virtual clusters create an appealing middle ground between spinning up multiple real clusters and simply using separate namespaces. They are only slightly more costly than namespaces but create strongly isolated environments.

Think, for example, about B2B SaaS companies that currently spin up a new Kubernetes cluster for each of their enterprise customers. This comes at a cost, and using virtual clusters instead could lead to significant savings for them.

I can also see this being used for testing, where a company might spin virtual Kubernetes clusters up and down on demand as test environments.

How does it work?

Virtual clusters essentially turn a namespace into a cluster of its own. vcluster replicates the components of a standard Kubernetes cluster, such as the API server, controller manager, and scheduler. However, these components interact with the physical cluster's resources in a way that is abstracted and isolated, allowing multiple virtual clusters to coexist on the same physical infrastructure without interference, each believing it's an independent Kubernetes cluster.

As you can see, the pods exist on the host cluster, but everything else, such as

deployments

statefulsets

secrets

configmaps

…

only exists in the virtual cluster and can’t be seen from the host cluster.

Now that you know what we are talking about, let’s set up a new virtual cluster on top of AWS EKS.

Creating a new EKS Cluster

The first thing we need for our setup is a Kubernetes cluster to act as the host cluster. We will be using the Elastic Kubernetes Service (EKS) by AWS. To set up an EKS cluster with Terraform, there are two common approaches:

aws_eks_cluster resource provided by the Terraform AWS provider

eks module provided by the community

At the time of writing, the community module has over 1.2 million downloads in the last month and is one of the most established and well-maintained community modules. It makes the whole setup a lot easier, so we will be using it here as well. If you need more fine-grained control, you can opt for the aws_eks_cluster resource instead.

The Terraform sample below shows that we will be creating a new VPC for the cluster and that we will have two node groups with one node in each, resulting in a cluster of two nodes in total.

locals {

cluster_name = "vcluster-eks"

}

module "vpc" {

source = "terraform-aws-modules/vpc/aws"

version = "5.0.0"

name = "vcluster-vpc"

cidr = "10.0.0.0/16"

azs = ["eu-central-1a", "eu-central-1b"]

private_subnets = ["10.0.1.0/24", "10.0.2.0/24"]

}

module "eks" {

source = "terraform-aws-modules/eks/aws"

version = "19.15.3"

cluster_name = local.cluster_name

cluster_version = "1.28"

vpc_id = module.vpc.vpc_id

subnet_ids = module.vpc.private_subnets

cluster_endpoint_public_access = true

eks_managed_node_groups = {

one = {

name = "node-group-1"

instance_types = ["t3.small"]

min_size = 1

max_size = 1

desired_size = 1

}

two = {

name = "node-group-2"

instance_types = ["t3.small"]

min_size = 1

max_size = 1

desired_size = 1

}

}

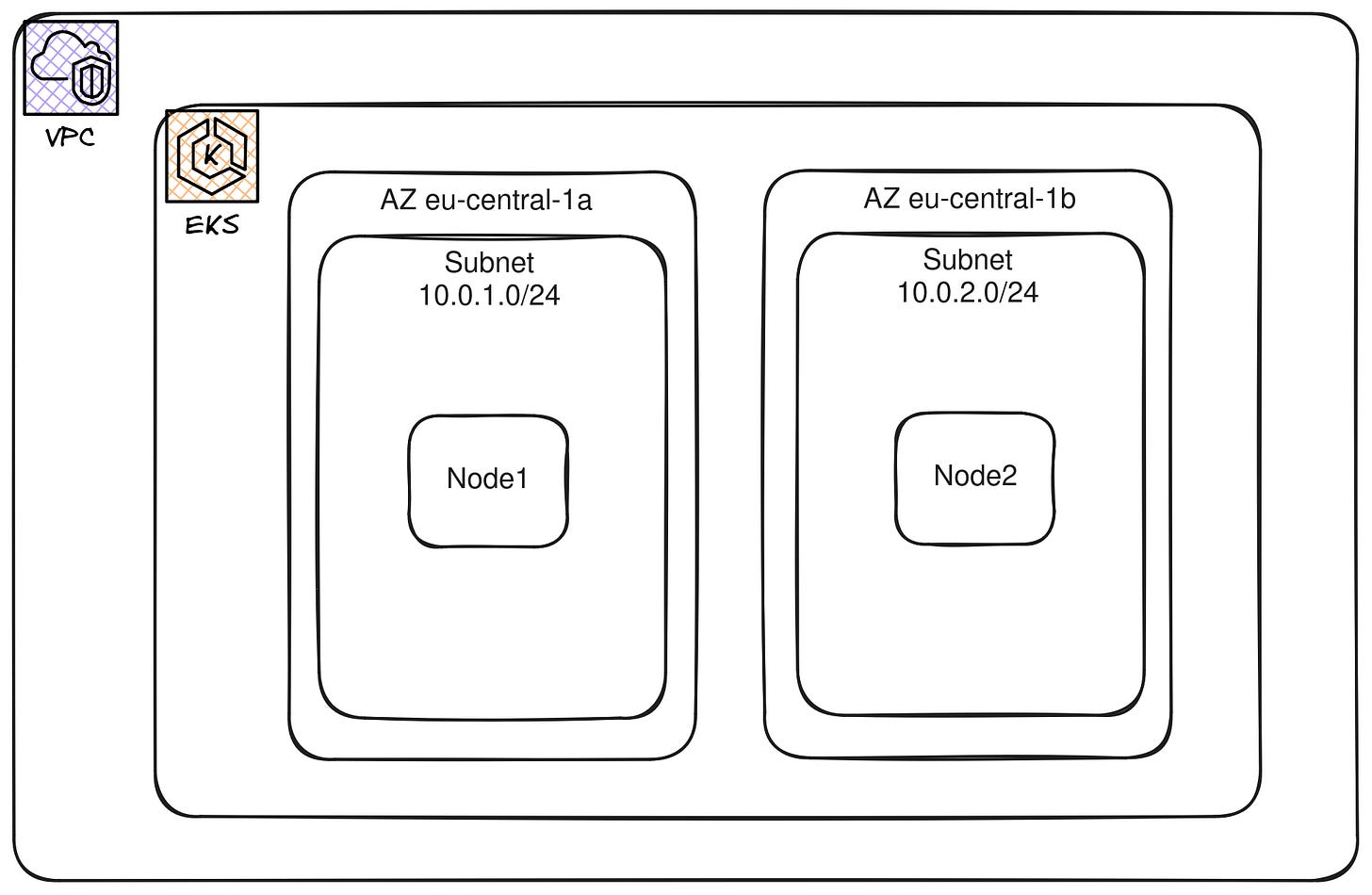

}The following diagram should help in visualizing the different encapsulation layers. The EKS cluster only exists within the boarders of the VPC, it spawns across two availability zones (eu-central-1 and eu-central-1b) and each node of this cluster will be in it’s own subnet within one of the two AZs.

Note that it is not a given that each node will have its own subnet. If you have many nodes, several of them will end up in the same AZ and in the same subnet.
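One thing the abbreviated VPC snippet leaves out: worker nodes in private subnets generally need outbound internet access, for example to pull container images, and this is usually provided by a NAT gateway placed in a public subnet. The following is a minimal sketch of how the vpc block could be extended for that. The public subnet CIDRs and the choice of a single shared NAT gateway are illustrative assumptions, so check the repository for the exact values.

```hcl
module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "5.0.0"

  name = "vcluster-vpc"
  cidr = "10.0.0.0/16"

  azs             = ["eu-central-1a", "eu-central-1b"]
  private_subnets = ["10.0.1.0/24", "10.0.2.0/24"]

  # Assumed values: public subnets that host the NAT gateway.
  public_subnets = ["10.0.101.0/24", "10.0.102.0/24"]

  # Give the private subnets outbound internet access.
  # A single shared gateway keeps costs down for a demo setup.
  enable_nat_gateway = true
  single_nat_gateway = true
}
```

A single NAT gateway is cheaper than one per AZ but is also a single point of failure, which is acceptable for an experiment like this one.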

Creating a virtual cluster inside EKS

Now that we have an EKS cluster up and running, adding vcluster to it can easily be done via a Helm chart provided by Loft Labs (the company behind vcluster).

resource "helm_release" "demo_vcluster" {
  name             = "demo-vcluster"
  namespace        = "vcluster"
  create_namespace = true

  # Official vcluster chart from the Loft Labs chart repository.
  repository = "https://charts.loft.sh"
  chart      = "vcluster"
  version    = "0.18.1"
}

Note that, depending on when you try to replicate this setup, you might have to change the version number. Development around vcluster is moving rapidly, and 0.19.0 is already in alpha.
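For the helm_release resource to work, the Helm provider has to know how to reach the new EKS cluster. Here is a minimal sketch of one common way to wire this up. It assumes version 2.x of the Helm provider, a locally installed AWS CLI, and the cluster_endpoint, cluster_certificate_authority_data and cluster_name outputs of the eks module; the repository may configure this differently.

```hcl
provider "helm" {
  kubernetes {
    # Connection details taken from the outputs of the eks module.
    host                   = module.eks.cluster_endpoint
    cluster_ca_certificate = base64decode(module.eks.cluster_certificate_authority_data)

    # Fetch a short-lived token via the AWS CLI (assumed to be installed).
    exec {
      api_version = "client.authentication.k8s.io/v1beta1"
      command     = "aws"
      args        = ["eks", "get-token", "--cluster-name", module.eks.cluster_name]
    }
  }
}
```

Using exec instead of a static token means Terraform requests fresh credentials on every run, which avoids expired tokens during longer applies.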

Connecting to the virtual cluster

Connecting to the vcluster is straightforward. Let’s first look at the available virtual clusters:

vcluster list

You should see the “demo-vcluster” up and running. The output is similar to that of, e.g., ‘docker ps’.

Once the vcluster has been created, all you need to connect to it is its name.

vcluster connect demo-vcluster

While you are connected, your kube-context is modified, and all “kubectl” commands you run from here on will target the virtual cluster instead of the host cluster.

You can verify that your context has changed by running the command below:

$ kubectl config current-context
vcluster_demo-vcluster_vcluster_arn:aws:eks:eu-central-1:345411212130:cluster/vcluster-eks

After connecting to the virtual cluster, we can interact with it in the same way we would with any other Kubernetes cluster. The fact that this is a virtual cluster is completely hidden from us.

Looking at the cluster in k9s, we only see a single pod running CoreDNS.

Conclusion

Virtual clusters currently seem like a promising technology to me, and I could see them being heavily used for testing in the future, especially in the context of CI/CD pipelines. They allow for isolated testing environments that closely mimic production settings, enabling more accurate testing and faster deployment cycles. Furthermore, virtual Kubernetes clusters contribute to efficient resource utilization, reducing the cost associated with physical infrastructure.

Closing words

I hope you got some value out of this and consider leaving your email address so that you don’t miss out on any of my future posts. I am also just a human who makes mistakes, so if you think anything here is wrong, please let me know.

If you want to encourage me to keep doing this you can buy me a coffee.